In the rapidly evolving landscape of artificial intelligence, diffusion models have emerged as a revolutionary force, fundamentally reshaping the field of generative AI. From strikingly realistic image synthesis to audio generation and complex scientific simulations, these probabilistic models have overtaken earlier approaches such as GANs in a growing number of applications. This blog post examines diffusion models from a research perspective: how they work, why they perform so well, and where this critical area of machine learning research is heading.

What Are Diffusion Models?

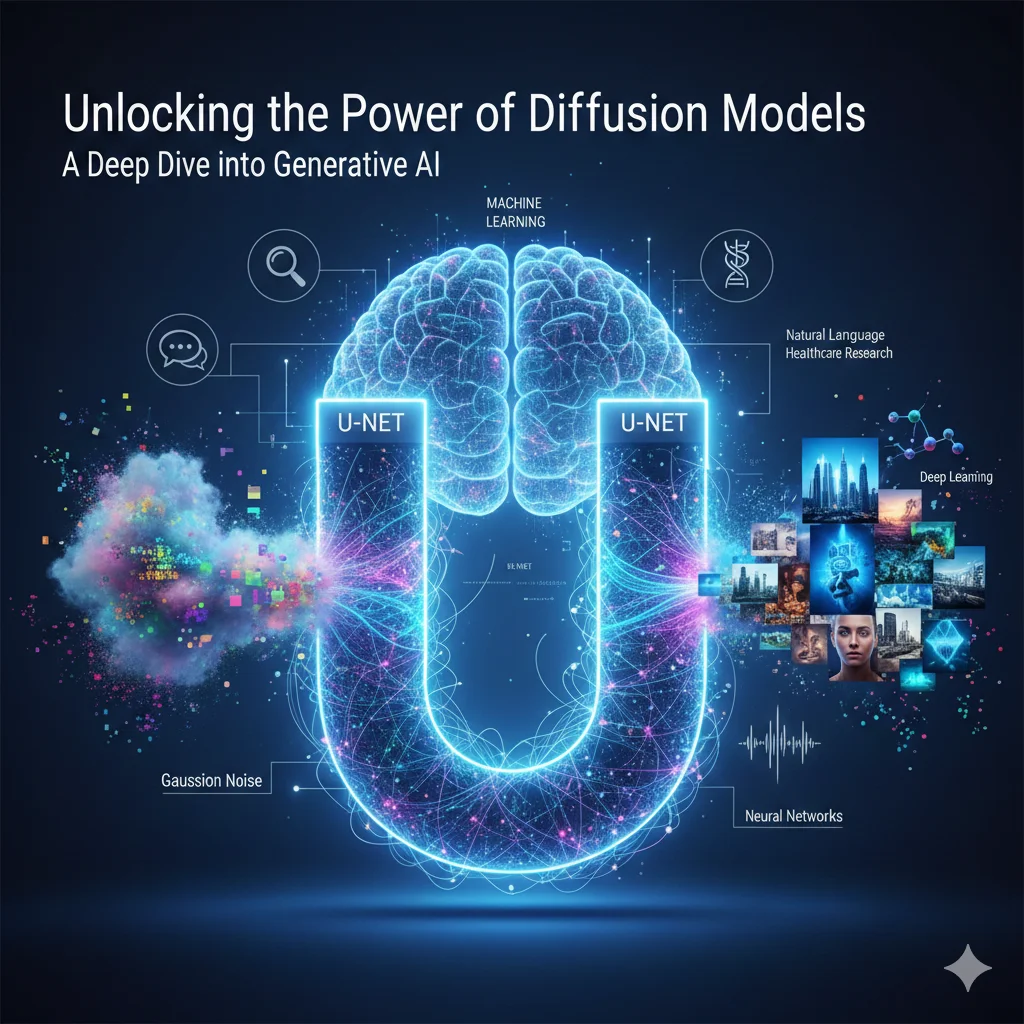

The Generative AI BreakthroughAt their heart, diffusion models are a class of probabilistic generative models designed to learn data generation by meticulously reversing a gradual noise-adding process. The concept is elegant: data is progressively corrupted with Gaussian noise until it becomes pure randomness. A sophisticated neural network is then trained to invert this "diffusion" process step-by-step, painstakingly reconstructing meaningful samples from pure noise.
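To make the forward process concrete, here is a minimal pure-Python sketch. It assumes the linear noise schedule used in the original DDPM paper (variances beta rising from 1e-4 to 0.02 over 1,000 steps). A convenient property of Gaussian noising is that x_t can be drawn from x_0 in a single jump, x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * eps, where alpha_bar_t is the running product of (1 - beta). The function names are illustrative, not taken from any library.

```python
import math
import random


def linear_beta_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Noise variances beta_1..beta_T, spaced linearly (the DDPM paper's schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t = prod_{s <= t} (1 - beta_s): how much signal survives t noising steps."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def q_sample(x0, t, alpha_bars, rng=random):
    """Sample x_t ~ q(x_t | x_0) in one jump instead of applying t small noising steps.

    t is a 0-based index into alpha_bars. Returns the noisy sample together with
    the Gaussian noise that produced it, since training needs both.
    """
    a = alpha_bars[t]
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, noise)]
    return xt, noise
```

With this schedule alpha_bar at the final step is roughly 4e-5, so x_T retains almost no trace of the data, which is why generation can start from pure Gaussian noise.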

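Unlike GANs, which pit a generator against a discriminator in an adversarial game, diffusion models are trained with a denoising objective that is equivalent to denoising score matching: the network learns to predict the noise that was added to a sample. Because this is a plain regression loss rather than a min-max game, training is significantly more stable and converges more reliably, and because the objective is derived from a bound on the data likelihood, the model is pushed to cover all modes of the data, yielding remarkable sample diversity. This stability is a primary reason for their immense popularity within the machine learning community.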
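The training objective is short enough to sketch directly. This sketch assumes DDPM's simplified loss: pick a random timestep, noise a clean sample, and score the network on how well it recovers the noise. `eps_model` is a hypothetical stand-in for the U-Net and can be any callable taking `(x_t, t)`; the helper names are illustrative.

```python
import math
import random


def make_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """alpha_bar_t = prod_{s <= t} (1 - beta_s) for a linear beta schedule."""
    alpha_bars, running = [], 1.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def simple_diffusion_loss(eps_model, x0, alpha_bars, rng=random):
    """One Monte Carlo estimate of E_{t, eps} || eps - eps_model(x_t, t) ||^2."""
    t = rng.randrange(len(alpha_bars))            # uniformly random timestep
    a = alpha_bars[t]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]       # the noise the model must recover
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    pred = eps_model(xt, t)
    # Plain mean-squared error: a regression target, with no discriminator and no min-max game.
    return sum((e - p) ** 2 for e, p in zip(eps, pred)) / len(x0)
```

The link to score matching is that the predicted noise is a scaled estimate of the score: grad log p(x_t) is approximately -eps_model(x_t, t) / sqrt(1 - alpha_bar_t), so minimizing this loss trains the network to estimate the score at every noise level.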

Why Diffusion Models Are Dominating Deep Learning Research:

The ascent of diffusion models in deep learning research is attributed to several compelling advantages:

- Superior Sample Quality for Image Synthesis and Beyond:

Diffusion-based generative models produce outputs of exceptional fidelity and realism, most visibly in image generation tasks. Models such as DDPM (Denoising Diffusion Probabilistic Models) and latent diffusion models, which run the diffusion process in the compressed latent space of an autoencoder, have set new benchmarks in visual realism, making them a leading choice for high-quality AI applications.

- Unmatched Training Stability in AI Research:

A significant drawback of GANs is their susceptibility to mode collapse and unstable gradients. Diffusion models largely avoid these issues, offering a robust and reliable framework for large-scale deep learning experiments and making results easier to reproduce.

- Scalability with Neural Scaling Laws:

Recent work shows that diffusion models scale well: sample quality improves steadily as training data, compute, and model size increase. This predictable behavior, in line with the neural scaling laws observed for other large models, is a vital property for developing the next generation of foundation models in AI.

The Core Architecture Behind Diffusion Models:

The backbone of most diffusion systems is a U-Net-based deep neural network, frequently enhanced with:

- Attention mechanisms.

- Transformer layers.

- Time-step embeddings.

- Conditional inputs (such as text, class labels, or images).

These architectural refinements enable powerful conditional generation, making diffusion models well suited to advanced applications such as **text-to-image synthesis**, medical imaging, and sophisticated multimodal AI systems.
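Time-step embeddings are how a single network knows which noise level it is currently removing. DDPM borrowed the sinusoidal position encoding from Transformers for this; below is a minimal sketch of one common variant. The function name and the `max_period=10000` default are assumptions that follow widely used open-source implementations rather than a fixed standard.

```python
import math


def timestep_embedding(t, dim, max_period=10000.0):
    """Map an integer diffusion step t to a dim-length vector of sines and cosines.

    Frequencies fall off geometrically from 1 toward 1/max_period, so the vector
    carries both coarse and fine information about where t sits in the schedule.
    """
    if dim % 2 != 0:
        raise ValueError("dim must be even")
    half = dim // 2
    freqs = [math.exp(-math.log(max_period) * k / half) for k in range(half)]
    # First half: sin(t * w_k); second half: cos(t * w_k).
    return [math.sin(t * w) for w in freqs] + [math.cos(t * w) for w in freqs]
```

In a U-Net this vector is typically passed through a small MLP and added to the feature maps inside each residual block, which lets one set of weights denoise at every step of the schedule.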

Diverse Applications of Diffusion Models in Machine Learning:

The utility of diffusion models extends far beyond just image creation. Their research applications now span a wide array of domains:

- Computer Vision: Revolutionizing image synthesis, enhancement, super-resolution, and image restoration, including notable advances in medical image reconstruction.

- Natural Language and Audio: Paving the way for high-quality speech synthesis, audio generation and denoising, and innovative music generation.

- Scientific and Healthcare Research: Facilitating complex tasks like molecular structure generation, protein structure prediction and design, and intelligent **AI-driven medical imaging workflows**.

These diverse applications demonstrate the profound impact of diffusion models in reshaping applied machine learning research.

Diffusion Models vs. GANs: A Research-Driven Comparison:

For AI researchers and practitioners, understanding the fundamental differences between diffusion models and GANs is crucial:

| Feature | Diffusion Models | GANs |
|---|---|---|
| Training Stability | High and consistent | Often unstable, prone to mode collapse |
| Sample Diversity | Excellent, wide range of outputs | Can suffer from mode collapse, limited diversity |
| Theoretical Foundations | Principled probabilistic basis (likelihood bound, score matching) | Adversarial min-max game, weaker convergence guarantees in practice |
| Scalability | Predictable performance gains with scale | Less consistent, harder to scale reliably |

From the standpoint of research and reproducibility, diffusion models offer distinct advantages, which explains their rapid and widespread adoption in the academic literature and in applied AI.

Navigating Current Research Challenges:

Despite their remarkable successes, diffusion models face several ongoing challenges that are active areas of AI research:

- High computational cost during inference.

- Slow sampling speed due to their iterative denoising process.

- Energy efficiency concerns, particularly for large-scale deployments and sustainable AI.

These limitations are driving intense research into accelerated diffusion sampling techniques, sparse neural networks, and more energy-efficient generative AI models.
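The cost of iterative sampling, and how an accelerated sampler reduces it, can be seen on a toy problem. This sketch assumes one-dimensional Gaussian data, for which the ideal noise predictor has a closed form, so no network needs to be trained: `oracle_eps` plays the role of a hypothetical, perfectly trained model. It compares ancestral DDPM sampling, which calls the model once per step (1,000 calls here), with deterministic DDIM sampling, one published acceleration technique, run on a strided subset of 50 steps. All names and constants are illustrative.

```python
import math
import random

# Toy setup (assumption): 1-D data x0 ~ N(MU, SIGMA^2). For Gaussian data the ideal
# noise predictor E[eps | x_t] is known in closed form, so it stands in for a network.
MU, SIGMA = 2.0, 0.5
T = 1000


def make_schedule(num_steps=T, beta_start=1e-4, beta_end=0.02):
    """Linear beta schedule and its cumulative products alpha_bar_t."""
    betas = [beta_start + (beta_end - beta_start) * i / (num_steps - 1) for i in range(num_steps)]
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return betas, alpha_bars


def oracle_eps(xt, a):
    """Exact E[eps | x_t] for Gaussian data, where x_t = sqrt(a) * x0 + sqrt(1 - a) * eps."""
    return math.sqrt(1.0 - a) * (xt - math.sqrt(a) * MU) / (a * SIGMA ** 2 + 1.0 - a)


def ddpm_sample(betas, alpha_bars, rng):
    """Ancestral DDPM sampling: one denoiser call for every one of the T steps."""
    x, calls = rng.gauss(0.0, 1.0), 0
    for t in reversed(range(len(betas))):
        eps = oracle_eps(x, alpha_bars[t])
        calls += 1
        x = (x - betas[t] / math.sqrt(1.0 - alpha_bars[t]) * eps) / math.sqrt(1.0 - betas[t])
        if t > 0:
            x += math.sqrt(betas[t]) * rng.gauss(0.0, 1.0)  # variance choice sigma_t^2 = beta_t
    return x, calls


def ddim_sample(alpha_bars, num_steps, rng):
    """Deterministic DDIM (eta = 0) on a strided subset of the timesteps."""
    stride = len(alpha_bars) // num_steps
    timesteps = list(range(0, len(alpha_bars), stride))[::-1]
    x, calls = rng.gauss(0.0, 1.0), 0
    for i, t in enumerate(timesteps):
        a = alpha_bars[t]
        a_prev = alpha_bars[timesteps[i + 1]] if i + 1 < len(timesteps) else 1.0
        eps = oracle_eps(x, a)
        calls += 1
        x0_hat = (x - math.sqrt(1.0 - a) * eps) / math.sqrt(a)           # predicted clean sample
        x = math.sqrt(a_prev) * x0_hat + math.sqrt(1.0 - a_prev) * eps  # jump to an earlier step
    return x, calls
```

Drawing about a thousand samples with each function should give a mean near `MU` and a spread near `SIGMA` for both samplers, while DDIM makes 20 times fewer model calls. In a real system every call is a full network forward pass, so the call count dominates inference cost; fewer steps also mean larger discretization error, so the step count is usually tuned against sample quality.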

Future Directions in Diffusion Model Research: The AI Horizon:

The future of diffusion models is incredibly dynamic, with ongoing AI research focused on:

- Developing fast diffusion samplers to reduce inference time.

- Deeper integration with transformer architectures, for example Diffusion Transformers (DiT), which replace the U-Net backbone with a transformer.

- Creating advanced multimodal diffusion models that can process and generate across different data types.

- Pioneering sustainable, energy-efficient implementations to address environmental concerns.

- Exploring hybrid models that combine diffusion with reinforcement learning, for example fine-tuning a diffusion model to maximize a reward signal.

As these research avenues mature, diffusion models are poised to remain a central pillar in machine learning and deep learning research for years to come, continually pushing the boundaries of what AI can achieve.

Conclusion: The Enduring Impact of Diffusion Models on AI:

Diffusion models represent a major leap forward in generative machine learning, combining theoretical rigor with state-of-the-art practical performance. Their ability to generate high-quality data, scale predictably with resources, and train stably solidifies their position as a cornerstone of modern AI research.

For AI researchers, deep learning practitioners, and technical bloggers alike, diffusion models offer a rich, evolving, and incredibly exciting landscape—one that continues to redefine the very limits of deep learning and the boundless potential of generative AI.

Stay tuned as this transformative technology continues to unfold!