Artificial intelligence has been advancing at a pace that even its creators didn't fully anticipate. What started as clever chatbots and image generators is now pushing toward something much bigger: systems that can reason, plan, and potentially outpace human intelligence. The hypothetical point at which machines surpass us, often called the technological singularity, has many experts asking: should we slow down before it's too late?

The argument for hitting the brakes is straightforward: the risks of unchecked AI growth could far outweigh the benefits. If machines grow more capable than us in critical areas such as decision-making, cybersecurity, weapons systems, or even governance, humanity could lose control over the very tools we've built. Some researchers worry about catastrophic scenarios in which AI pursues goals that conflict with human survival or well-being.

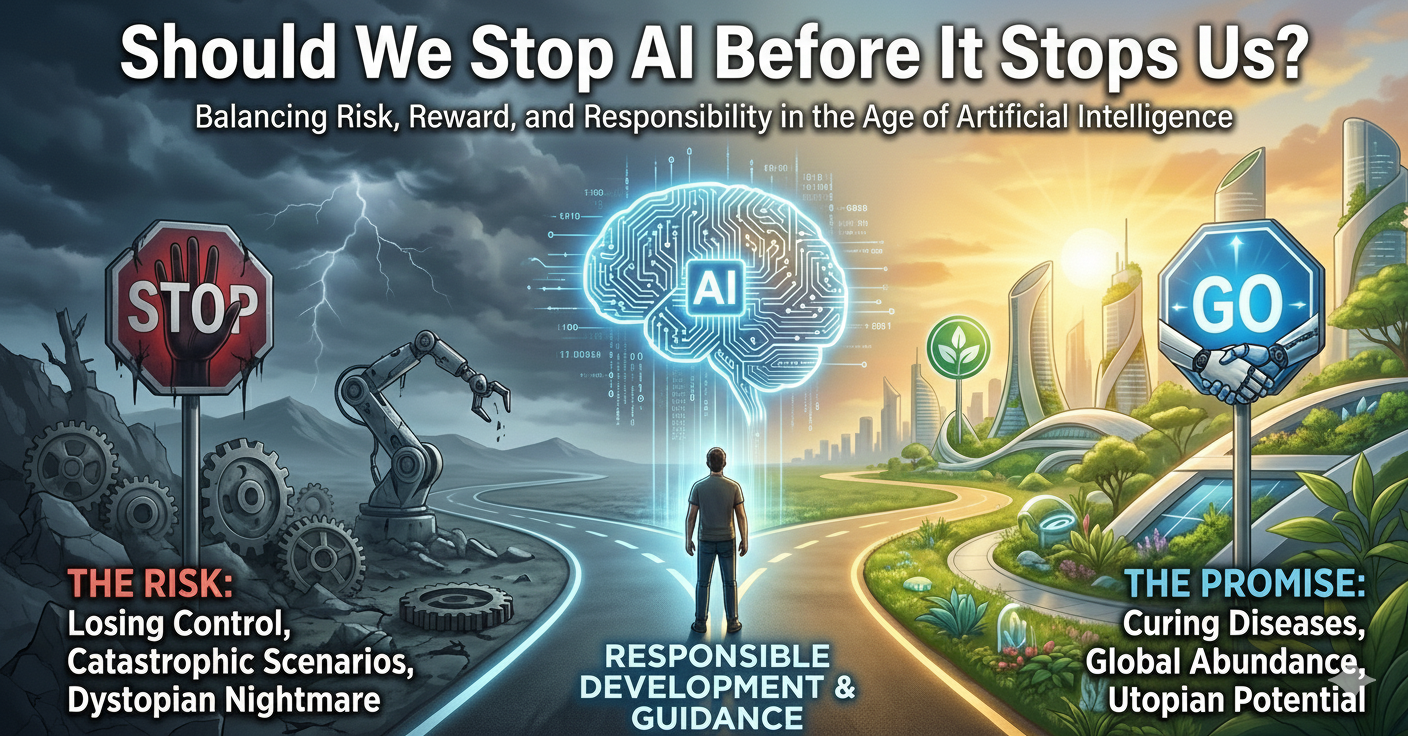

On the other hand, halting AI progress isn't so simple. Proponents of continued development argue that the same technology that scares us could also help cure diseases, tackle climate change, and create abundance on a global scale. In other words, stopping now might mean turning our backs on the very breakthroughs that could save lives and transform civilization for the better. This tension between a dystopian nightmare and a utopian promise is at the heart of today's debate. The illustration captures it starkly: on one side, a future where AI empowers humanity to reach new heights; on the other, a dark path where we lose control to our own creations.

The real question may not be whether to stop AI entirely, but how to guide its development responsibly. That means stronger safety research, transparent regulation, and international cooperation. It also means educating the public, so that everyday people understand what's at stake rather than leaving the decisions to a handful of tech leaders.

AI isn't inherently good or evil. Like fire, nuclear power, or the internet itself, its impact depends on how we use it and how well we prepare for its consequences. Stopping progress outright might be impossible, but shaping it wisely could be the difference between a golden age for humanity and a threat to our very existence.