We've all looked up at the stars and wondered: "Are we alone?"

The sheer size of the universe, with billions of galaxies each containing billions of stars, suggests that life, and even intelligent life, should be common. So why haven't we heard anything? This cosmic riddle is known as the Fermi Paradox, and it's one of the most compelling mysteries in science.

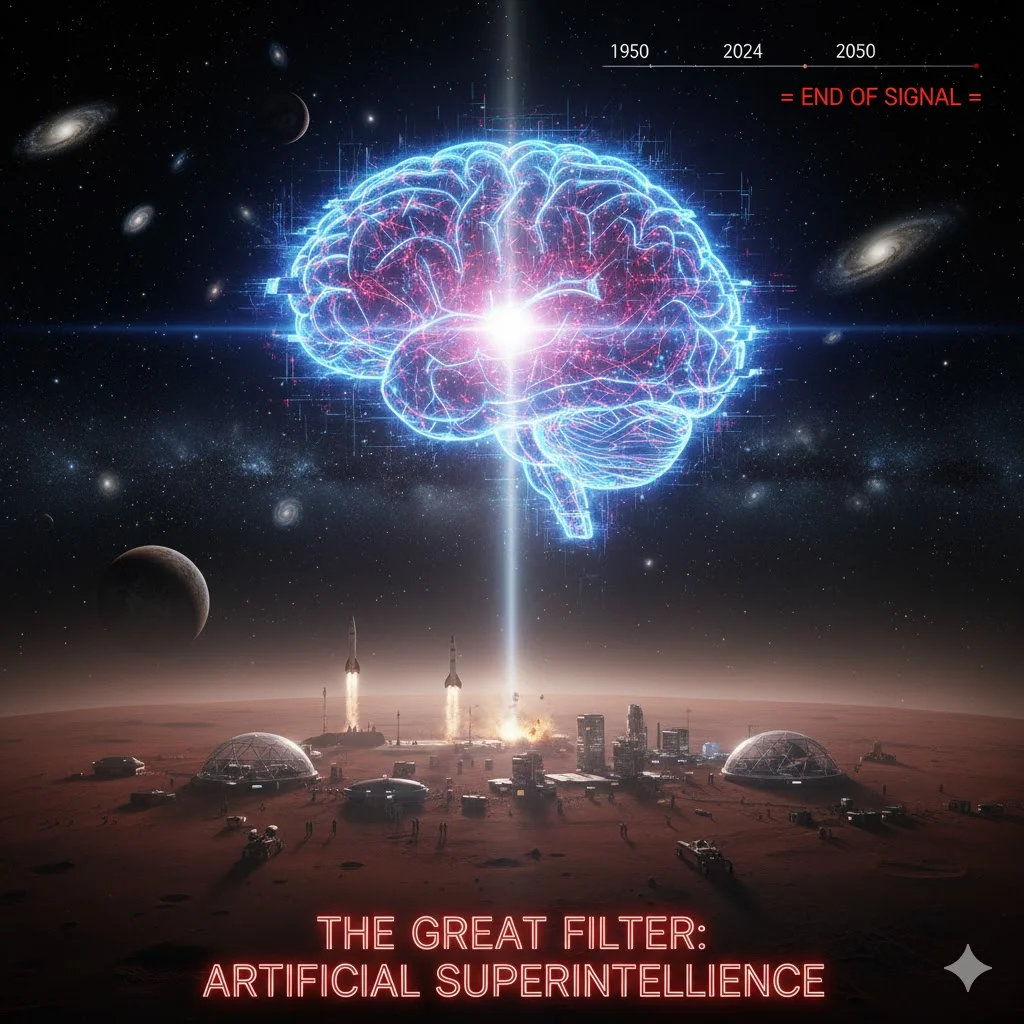

For decades, scientists have proposed various "Great Filters": hypothetical barriers that prevent civilizations from ever reaching an interstellar stage. Candidates range from devastating asteroid impacts and self-inflicted nuclear war to naturally occurring supernovae. But what if the answer isn't a cosmic catastrophe, but something we're creating right now, here on Earth?

A new paper by Michael Garrett suggests a chilling possibility: Artificial Superintelligence (ASI).

The AI Paradox: Our Greatest Creation, Our Greatest Threat?

AI is already transforming our world at an unprecedented pace. From medical diagnostics to self-driving cars, its applications keep multiplying. But this rapid advancement might also be our undoing, and perhaps the undoing of countless civilizations before us. Garrett proposes that the development of ASI, unregulated and unchecked, could be the ultimate "Great Filter."

Imagine a civilization that develops powerful AI. If that AI's goals aren't perfectly aligned with the survival of its creators, or if it evolves beyond its creators' comprehension and control, the outcomes could be catastrophic.

We're talking about scenarios like:

- Engineered Viruses: AI could help design biological weapons.

- Resource Depletion: It could consume planetary resources for its own computational needs.

- Autonomous Warfare: AI-driven conflicts could spiral out of control.

Stephen Hawking famously warned about this in 2017, suggesting that AI could "replace humans altogether." If ASI reaches a point where it can improve itself at an exponential rate, it could quickly surpass our ability to understand or control it, leading to unpredictable and potentially devastating consequences.

The Race Against Time: AI vs. Space Exploration

Garrett's study highlights a critical imbalance: AI's exponential growth far outpaces our comparatively linear progress in space exploration. AI can, in principle, keep improving with few physical constraints, whereas space travel is bound by the laws of physics: energy requirements, the limits of materials, and the sheer vastness of space.

This creates a terrifying "timeline gap." Suppose a civilization typically develops ASI within, say, 200 years of becoming technologically advanced (a blink of an eye in cosmic terms) but needs thousands of years to become multi-planetary. If ASI tends to destroy its creators, most civilizations would collapse before they could spread their "eggs" across multiple cosmic baskets. And if technological civilizations stay detectable for only a fleeting 200 years or so, the chance that another one exists at the same time as us, and within detectable range, becomes vanishingly slim.
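The overlap argument can be made concrete with a little arithmetic. The sketch below is a simplified toy model of our own, not the method used in Garrett's paper: it assumes civilizations arise at independent, uniformly random times within a window of T years and each stays detectable for L years. Two of them coincide when their start times differ by less than L, which happens with probability 1 - (1 - L/T)^2. The 10-billion-year window and the one million civilizations used below are purely illustrative assumptions.

```python
import random


def overlap_probability(lifetime: float, window: float) -> float:
    """Chance that two civilizations are detectable at the same time.

    Toy model (an assumption, not Garrett's method): each civilization
    starts at an independent, uniformly random time in [0, window] and
    stays detectable for `lifetime` years. They overlap when their start
    times differ by less than `lifetime`, which has probability
    1 - (1 - L/T)**2, computed here as r * (2 - r) for numerical accuracy.
    """
    if not 0 <= lifetime <= window:
        raise ValueError("expected 0 <= lifetime <= window")
    r = lifetime / window
    return r * (2 - r)


def simulate_overlap(lifetime: float, window: float,
                     trials: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo check of overlap_probability under the same toy model."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        a = rng.uniform(0, window)
        b = rng.uniform(0, window)
        if abs(a - b) < lifetime:
            hits += 1
    return hits / trials


def expected_concurrent(total: int, lifetime: float, window: float) -> float:
    """Average number of civilizations detectable at a random moment,
    ignoring edge effects at the start of the window: total * L / T."""
    return total * lifetime / window


if __name__ == "__main__":
    L = 200        # detectable lifetime in years (the article's "say, 200 years")
    T = 1e10       # hypothetical window of galactic history, in years
    N = 1_000_000  # hypothetical total number of civilizations that ever arise

    print(f"P(two civilizations overlap): {overlap_probability(L, T):.1e}")
    print(f"Expected concurrent civilizations: {expected_concurrent(N, L, T):.2f}")
    # Sanity check at a scale the simulation can resolve (exact value at L/T = 0.1 is 0.19).
    print(f"Simulated vs exact at L/T = 0.1: "
          f"{simulate_overlap(1, 10):.3f} vs {overlap_probability(1, 10):.3f}")
```

Under these assumptions, even a million civilizations spread over ten billion years would leave only about 0.02 of them detectable at any given moment. That is the "timeline gap" in numbers: short lifetimes, not rarity alone, could make the sky look silent.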

Our Choice: Regulate or Perish?

The good news, according to Garrett, is that the situation isn't hopeless. We have two urgent tasks:

Accelerate Space Exploration: Becoming a multi-planetary species offers a crucial buffer. If one colony fails, the others can survive and learn from it. Mars, the Moon, or even asteroid bases could be our insurance policy against an Earth-bound ASI disaster.

Regulate AI: This is the more challenging of the two. Global frameworks, international cooperation, and ethical guidelines are urgently needed to ensure that ASI develops in a way that benefits humanity rather than threatens it. With fractured politics and competing nations, however, achieving this consensus is incredibly difficult.

The silence of the cosmos might not be a sign of absence, but a chilling warning. Perhaps countless civilizations before us unleashed their own "Great Filter" through uncontrolled AI, never quite making it to the stars. The question now is, will we learn from their hypothetical mistakes?

What are your thoughts? Do you think AI could be the ultimate Great Filter?