Artificial intelligence has made incredible leaps in recent years. Models like GPT-3 can write full essays, solve complex math problems, generate computer code, and hold conversations that feel surprisingly natural. But a major question still remains:

How close are these AI systems to actually thinking like humans?

To explore this, researchers at the Max Planck Institute for Biological Cybernetics in Tübingen decided to examine GPT-3 the same way psychologists study human minds. And the results were fascinating.

🧠 Testing GPT-3 Like a Human:

Psychologists Marcel Binz and Eric Schulz designed a series of experiments to probe GPT-3's cognitive abilities. These weren't technical benchmarks; they were classic tasks from cognitive psychology used to measure human reasoning.

They tested the model on:

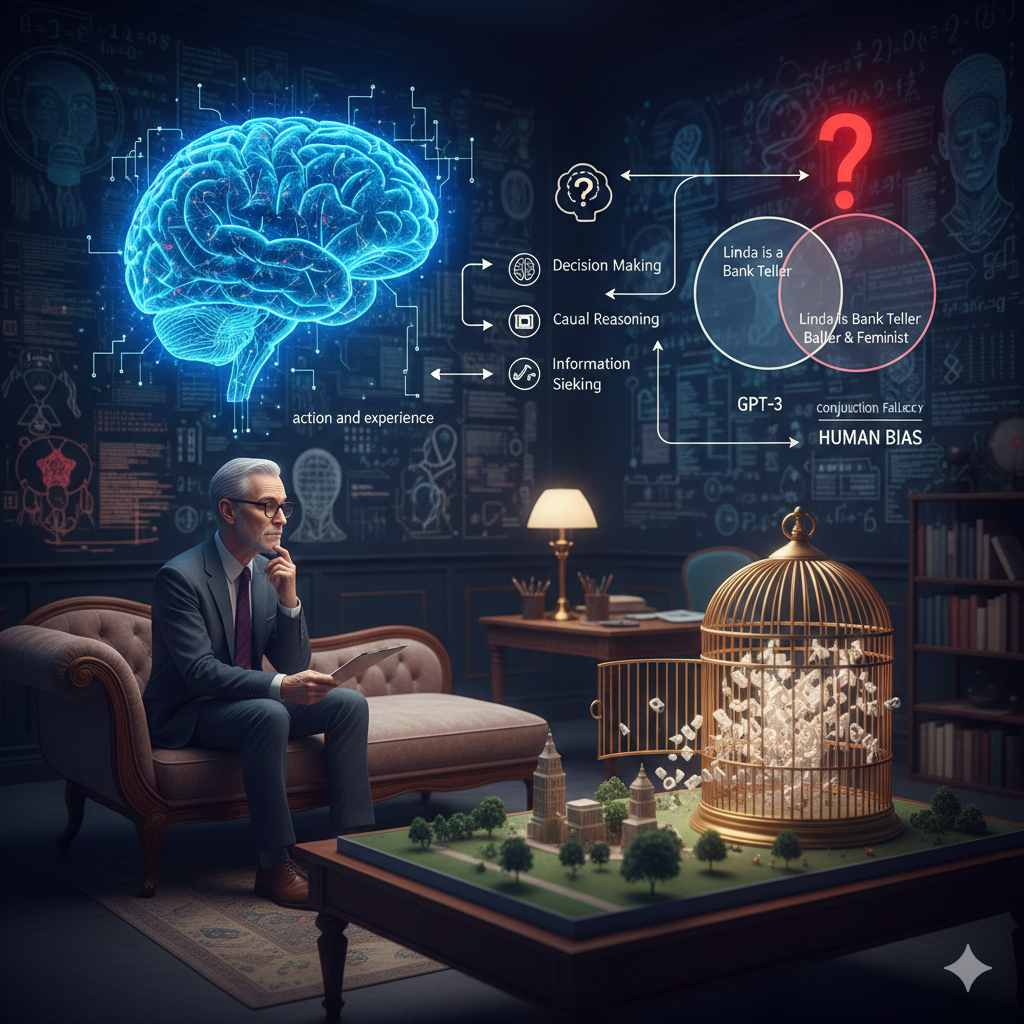

- Decision-making.

- Causal reasoning.

- Information-seeking behavior.

- Ability to revise earlier judgments.

GPT-3’s responses were then compared with answers from human participants.

One of the key experiments involved the famous Linda problem, a classic test of probabilistic reasoning. In this scenario, participants read about a woman named Linda who is socially aware and outspoken. They must then choose which of the following is more likely:

- Linda is a bank teller.

- Linda is a bank teller and a feminist.

Most people choose the second option, even though it logically cannot be more likely than the first: every feminist bank teller is also a bank teller. This error is known as the conjunction fallacy. Surprisingly, GPT-3 made the same mistake, suggesting that it doesn't just reproduce human reasoning… it also reproduces human biases.
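The "can't be more likely" argument is the conjunction rule of probability: P(A and B) ≤ P(A), because everyone who satisfies both A and B also satisfies A. The minimal Python sketch below makes that concrete. The population and its head counts are entirely hypothetical, invented for illustration, and are not data from the study.

```python
from fractions import Fraction
from itertools import product

# Hypothetical population; the numbers are made up purely for illustration.
# Keys are (is_bank_teller, is_feminist); values are head counts.
LINDA_LIKE = {
    (True, True): 2,
    (True, False): 3,
    (False, True): 60,
    (False, False): 35,
}

def probability(population, predicate):
    """Exact share of people whose traits satisfy `predicate`."""
    total = sum(population.values())
    matching = sum(n for traits, n in population.items() if predicate(*traits))
    return Fraction(matching, total)

def teller(is_teller, is_feminist):
    return is_teller

def teller_and_feminist(is_teller, is_feminist):
    return is_teller and is_feminist

p_a = probability(LINDA_LIKE, teller)
p_ab = probability(LINDA_LIKE, teller_and_feminist)
print(f"P(teller) = {p_a}, P(teller and feminist) = {p_ab}")
# prints: P(teller) = 1/20, P(teller and feminist) = 1/50

# Exhaustive check over every small population (0-3 people per group):
# the conjunction never beats the single event, because everyone counted
# in "teller and feminist" is also counted in "teller".
keys = list(LINDA_LIKE)
for counts in product(range(4), repeat=len(keys)):
    if sum(counts) == 0:
        continue  # skip the empty population (probability undefined)
    pop = dict(zip(keys, counts))
    assert probability(pop, teller_and_feminist) <= probability(pop, teller)
```

However vivid the description of Linda, adding a detail can only shrink (or keep equal) the set of people who fit, never enlarge it.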

🔍 Beyond Memorization: Can GPT-3 Really Think?

Some people might say GPT-3 performs well because it has simply memorized famous problems from the internet. To rule this out, the researchers created fresh, unseen tasks similar to the Linda problem.

What they found was clear:

✔ GPT-3 could still match human decision-making in many cases.

✘ But it struggled with tasks requiring causal reasoning or active information searching.

And this highlights a key limitation:

- GPT-3 learns only from text. It has never interacted with the real world.

- Humans learn through action, experience, and trial and error, something a text-only model like GPT-3 does not have.

🚀 What This Means for AI’s Future:

The study shows that large language models can imitate many patterns of human reasoning, including our mistakes. But they still lack the deeper, flexible intelligence that comes from real-world experience.

To truly reach human-level cognition, AI may need the ability to interact with its environment, not just read about it.

Still, as models like GPT-3 engage with millions of people daily, future models might learn from these interactions and gradually move toward more human-like thinking.

Binz and Schulz summarize it well:

GPT-3 is powerful — sometimes even on par with humans — but it remains a fundamentally different kind of mind.