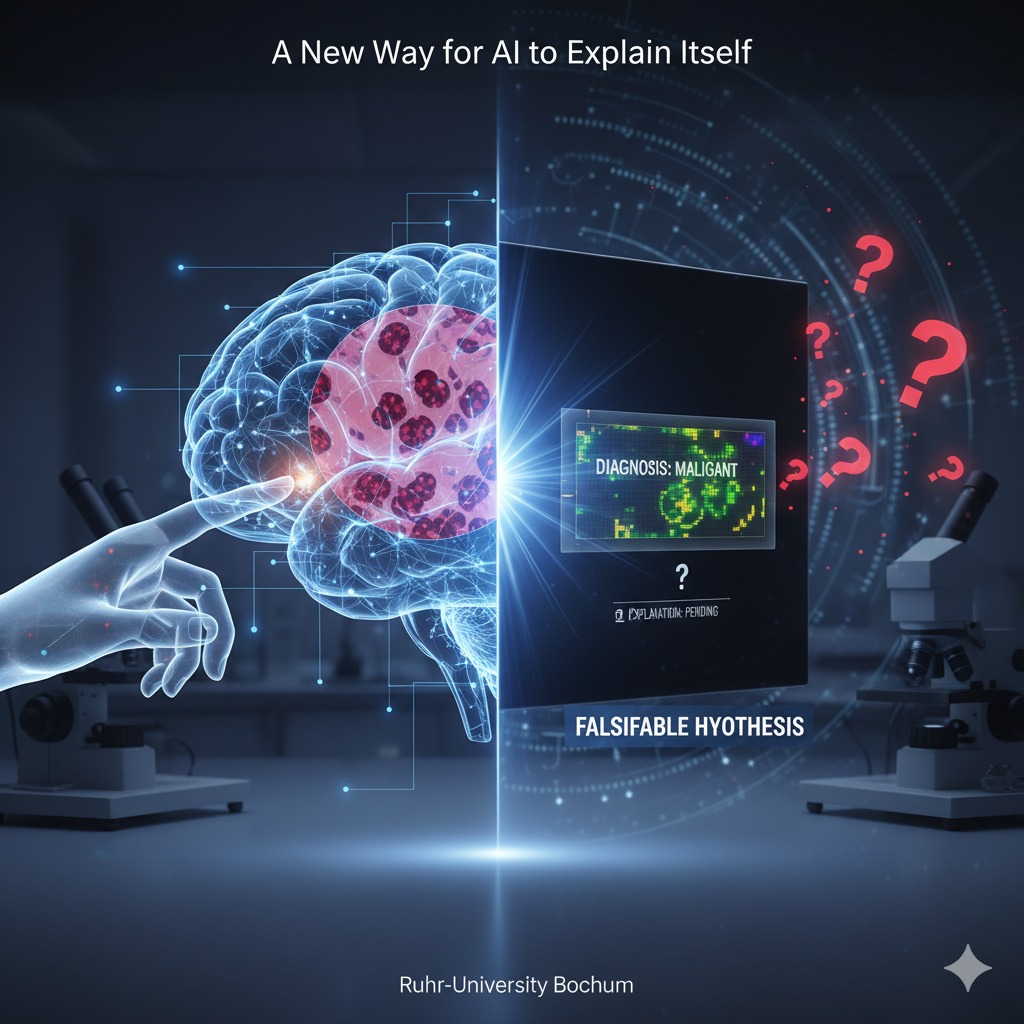

Artificial intelligence (AI) is already being used to help with medical diagnoses, like identifying tumors in tissue samples. But a major problem is that these AI systems can't explain how they reach their conclusions. This lack of transparency can make it difficult to trust the results, especially for critical applications in medicine.

A team of researchers at Ruhr-University Bochum has developed a new approach to make AI's decisions transparent and trustworthy. Their research was published on August 24, 2022, in the journal Medical Image Analysis.

Moving Beyond the "Black Box"

The research team, led by Professor Axel Mosig, created a neural network, a type of AI, that can classify whether a tissue sample contains a tumor or not. They trained the AI using a large collection of microscopic images of tissue, some with tumors and some without. Typically, neural networks are like a "black box": it's not clear what specific features the network learns from the data to make its decisions. This is different from a human expert who can explain their reasoning.

A Scientific Approach to AI

To solve this problem, the Bochum team based their AI on a scientific principle: falsifiable hypotheses. In science, a hypothesis must be something that can be proven false through an experiment.

Traditional AI often uses inductive reasoning, where it creates a general model based on specific observations from its training data. A classic example of the problem with this is the "black swan" theory: no matter how many white swans you see, you can't prove that black swans don't exist. Science, on the other hand, uses deductive logic, starting with a general hypothesis that can be disproven by a single observation.

The researchers found a way to bridge this gap. Their new neural network not only classifies a tissue sample but also creates an activation map. This map highlights the areas in the image that the AI identifies as a tumor.

The key is that this activation map is based on a falsifiable hypothesis: that the activated regions on the map correspond exactly to the tumor areas in the sample. Scientists can then use molecular methods to test this hypothesis and confirm the AI's findings. This new approach makes the AI's reasoning visible and verifiable, paving the way for more trustworthy and transparent AI in medicine.
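To make the idea of testing the activation map more concrete, here is a minimal Python sketch of how such a check could look. It is an illustration only, not the Bochum team's method: the function names, the 0.5 activation cutoff, and the 0.8 overlap tolerance are all assumptions, and the molecular measurement is represented simply as a grid of 0s and 1s marking where a tumor was independently confirmed. Where the hypothesis demands an exact match, the sketch allows a tolerance, since real measurements are noisy.

```python
# Illustrative sketch only: names, cutoff, and tolerance are assumptions,
# not taken from the published study.

def threshold(activation_map, cutoff=0.5):
    """Turn a 2D grid of activation scores into a binary tumor mask."""
    return [[score >= cutoff for score in row] for row in activation_map]


def intersection_over_union(predicted, reference):
    """Overlap between two equally sized binary masks (1.0 means identical)."""
    intersection = 0
    union = 0
    for pred_row, ref_row in zip(predicted, reference):
        for p, r in zip(pred_row, ref_row):
            p, r = bool(p), bool(r)
            intersection += p and r
            union += p or r
    # Two empty masks agree perfectly: no tumor predicted, none measured.
    return intersection / union if union else 1.0


def hypothesis_survives(activation_map, molecular_mask, min_iou=0.8, cutoff=0.5):
    """Return False if the molecular evidence contradicts the activation map."""
    predicted = threshold(activation_map, cutoff)
    return intersection_over_union(predicted, molecular_mask) >= min_iou


# Hypothetical 2x3 tissue patch: activation scores from the network ...
activation = [[0.9, 0.8, 0.1],
              [0.7, 0.2, 0.0]]
# ... and two possible outcomes of an independent molecular measurement.
agreeing_measurement = [[1, 1, 0],
                        [1, 0, 0]]
contradicting_measurement = [[0, 0, 1],
                             [0, 1, 1]]

print(hypothesis_survives(activation, agreeing_measurement))       # True
print(hypothesis_survives(activation, contradicting_measurement))  # False
```

The point of the sketch is the second result: a single measurement that disagrees with the map is enough to reject the hypothesis, which is what makes the network's output testable rather than something to be taken on trust.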